Benefits to Branch Elimination

Mike Acton

April 11, 2006

April 11, 2006

Better Performance Through Branch Elimination

Part 1: IntroductionPart 2: Background on Branching

Part 3: Benefits to Branch Elimination

Part 4: Programming with Branches, Patterns and Tips

Part 5: More Techniques for Eliminating Branches

Additional Examples:

Increment And Decrement Wrapping Values

Occasionally you have a set of values that you want to wrap around as you increment and decrement them. But the straightfoward implementation can have a big impact on processors where comparisons and branches are expensive (e.g. PowerPC). This article presents a straightforward branch-free implementation of these functions.

Choosing to Avoid Branches: A Small Altivec Example

An example of why less instructions doesn't always equal faster code.

Branch-free implementation of half-precision (16 bit) floating point

The goal of this project is serve as an example of developing some relatively complex operations completely without branches - a software implementation of half-precision floating point numbers.

Background

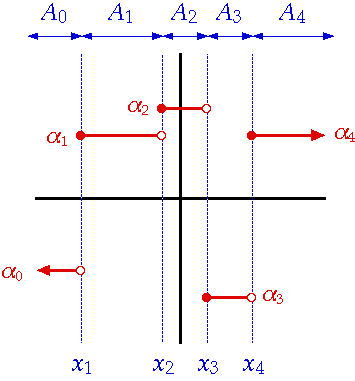

The fundamental principal behind branch elimination is that expressing a value as a simple function of its inputs (a single basic block) is often more efficient than selecting a result through a change in control flow (branching).Take for example, this simple step function:

|

if ( x < x1 )

{

a = a0;

}

else if ( x < x2 )

{

a = a1;

}

else if ( x < x3 )

{

a = a2;

}

else if ( x < x4 )

{

a = a3;

}

else

{

a = a4;

}

|

This function can also be expressed by the following branch-free expression, where x is a unsigned 32 bit integer in the range 0 < x < 0x7fffffff

uint32_t x_lt_x1 = (uint32_t)((int32_t)(x - x1) >> 31); uint32_t x_lt_x2 = (uint32_t)((int32_t)(x - x2) >> 31); uint32_t x_lt_x3 = (uint32_t)((int32_t)(x - x3) >> 31); uint32_t x_lt_x4 = (uint32_t)((int32_t)(x - x4) >> 31); uint32_t result_0 = (x_lt_x4 & a3 ) | (~x_lt_x4 & a4); uint32_t result_1 = (x_lt_x3 & a2 ) | (~x_lt_x3 & result_0); uint32_t result_2 = (x_lt_x2 & a1 ) | (~x_lt_x2 & result_1); uint32_t result = (x_lt_x1 & a0 ) | (~x_lt_x1 & result_2);

Mispredicted branches that continue execution at the wrong address will cause all the instructions that have been executed up to the time the right prediction is found to be canceled, causing a delay in program execution.

Both the PPU and the eight (8) on-board SPUs are in-order, superscalar, RISC platforms designed for one thing - throughput, or executing the maximum number of instructions in the minimum amount of time. In the best case both architectures can issue two (2) instructions per clock cycle. But in order to approach the maximum throughput in performance critical code on either architecture, two things must be true:

- There must be something to execute on each cycle.

- There must be enough instructions between the start of an instruction and the use of its result to cover the latency.

Here is a summary of the best reasons to consider writing branch-free code (i.e. branch elimination):

- Better Code Scheduling

- Easier to profile

- Easier to optimize

- Easier to organize instruction layer.

- More predictable gains through inlining

- Easier to move to SIMD

- Improved accuracy of remaining dynamic branch predictions

And in consideration of the one main objection: Harder to understand

Better Code Scheduling

Regardless of how good the branches are predicted, because branches negatively impact how code is scheduled, there is often benefit to reducing them.Most of the work that the compiler's code scheduler will do will be on basic blocks. A basic block is any series of instructions that can be executed unconditionally. Within a block, the compiler will be able to schedule instructions and manage the registers very well. However it's between basic blocks that things get complicated.

Every branch divides the code into three basic blocks.

For example:

A: do some stuff ...

if ( some_condition )

{

B: do some stuff ...

}

C: do some stuff ...

The scheduler must decide:

- Where to put A,B and C (since they do not need to be contiguous).

- How registers will be allocated inside of A, B and C

- Can some_condition be predicited statically?

- etc.

However, by eliminating the branch altogether the compiler's job is made much easier and better code generation will (often) result. There are no guarantees, of course -- in many situations a branch is exactly what you want.

Less branches means easier to schedule loads and stores together. Although GCC has a limited ability to move loads outside of a branch, it's very conservative. For the PPU and SPU, the most effective pattern is (for all objects in a block) LOAD -> UPDATE -> STORE. Use the large register files and pipeline the loads early. For an example of the effective use of this pattern, see the Box Overlap example.

The advantage of consecutive loads is by pushing the loads as far in advance as possible (which is done by loading to the limit of the available registers) the maximum amount of time can be exploited for data to be loaded from the cache.

Easier to profile

Less branches means more predictable performance. Fundamentally, all branch prediction schemes are concerned with average performance. By contrast,

once data and instruction cache hits are accounted for, branch-free code has constant, fixed performance. This allows programmers who are

optimizing a section of code based on its performance profile with only a small section of either time or data available, to effectively ignore the

impact varying data may have on performance. This property, in turn, makes any evaluation of the performance of other systems (e.g. cache hits) more

accurate and valuable to the programmer.

Easier to test

Less branches means less complexity to test. Approaching full code coverage becomes much easier with every branch

removed. When complex data is mixed with branchy code, there is an explosion of scenarios which must be tested, either

by programmer-devised or automatically-generated tests. Each element of the data must be tested for each branch it may

encounter and every branch must be tested to ensure that it is evaluating the correct data. In branch-free sections of

code, the tests can be limited to concentrate on the data since every element of data will follow

exactly the same path.Additionally, tests are more valuable in branch-free sections of code, since every test must effectively make every possible decision correctly in order to have the correct result. Thus, passing tests on branch-free code are a more accurate and reliable indicator of the validity of the code when compared to testing branchy code, where it can never be gauranteed, simply by looking at the tests, whether or not all the code has even been evaluated once.

Easier to optimize

Easier to organize instruction layer

Expect better (more predictable) code from the compiler. Beyond the scheduling impact, the traces are much simpler and most variables will be write-once (const). Many of the largest failures in optimization are closer to the front-end where the compiler is re-organizing code. Simple traces with write-once variables don't need to be re-organized.

More predictable gains through inlining

Easier to move to SIMD

Effective use of SIMD instructions depends on keeping the pipeline full as long as possible. This generally requires branch elimination. Branch-free scalar code is significantly easier to move to Altivec on the PPU, or the SPU instruction set than branchy code.

Improved accuracy of remaining dynamic branch predictions

Dynamic branch prediction it has its limits, like everything else. Branch prediction is based on taking certain bits of the branch address and looking them up in a branch history table. The table has values that range from very-unlikely to very-likely and the which set of instructions that are fetched are a function of that value. Then, depending on the actual result, the value in the table is adjusted for the next time. Branch history tables are very small though and in all likelyhood there are many branches that will end up using the same entry in the table making it more and more inaccurate.

Harder to understand (?)

Predicated results, or branch-free code, may impact readability somewhat. Programmers are acustomed to reading and writing branchy code. However, much of this can made clearer through well named variables and predicate functions/macros.

About the authors

Mike Acton is a Senior Architect working on PS3/Cell research at Highmoon Studios (Vivendi-Universal Games). Most recently, Mike was the Lead Special Effects Programmer for Darkwatch at Highmoon Studios (previously Sammy Studios) in Carlsbad, CA. Previously Mike has held Lead Programming, Playstation 2 Engine Development and Research roles with Yuke's, VBlank and BlueSky/Titus. He worked for Sony Interactive Studios in PSX development. Mike has made regular appearances as a speaker at SCEA develpment conferences and has spoken at GDC. Mike Acton is not a framework-happy C++ programmer. He actually likes C. And assembly. In his spare time he develops hardware on FPGAs in VHDL.

André de Leiradella started with computers in 1981 with a ZX-81 compatible with a built-in BASIC interpreter. Two years later he was already using assembly to code faster programs in this platform. Today André is a Support Analyst working at Xerox, and uses he's spare time to code pet projects that goes from using script languages to control a VNC server and fixed point expression compilers for J2ME, to a full Java bytecode interpreter.

André has a Computer Science degree and a M.Sc. degree in Artificial Intelligence and has a fairly good knowledge of many areas of Computer Science, managing to dig deep on any specific subject when needed.

May Also Interest You

Performance and Good Data DesignSome simple rules of thumb that programmers can follow to create a solid pipeline from the content creators to the screen and speakers.

Demystifying The Restrict Keyword (Mike Acton)

Optimizing data access is a critical part of good performance. Read on to find out how to use the restrict keyword to open up a whole class of optimizations that were previously impossible for a C compiler.

Avoiding Microcoded Instructions On The PPU (Mike Acton)

Executing instructions from microcode can wreck havok on inner loop performance. Find out which instructions are microcoded and how to avoid them.

luareader [geocities.com] (André de Leiradella)

A library that allows Lua files to be executed from a number of sources.

APF Project [geocities.com] (André de Leiradella)

This library is targetted at programs that need to find paths in small mazes. Diagonal movements are allowed.